Published on May 11, 2023

Overview

With growing demand for big data and machine learning, this article introduces word embeddings and shows how to implement the Word2Vec algorithm in Spark MLlib.

Digital transformation generates massive amounts of data every second, often more than a single business server can store or process, and real-time or streaming data is even more challenging to handle. When Hadoop arrived, businesses relied on MapReduce, which is written primarily in Java and requires a lot of boilerplate code. Spark followed as a faster, in-memory processing engine that can also handle streaming data and lets machine learning and analytics run on top of it. In this article we will learn about Spark MLlib, Spark’s library for training machine learning models on large datasets.

What’s Spark?

Apache Spark is a free and open-source unified analytics engine for big data. With implicit data parallelism and fault tolerance, Spark offers a programming interface for clusters. One of the most important distributed processing frameworks in the world today is Apache Spark. There are several applications for Spark, including machine learning, streaming data, and graph processing. Python, Scala, Java, and R are among the programming languages that Spark supports.

What’s SparkMLlib?

The Machine Learning Library (MLlib) in Apache Spark is intended to be straightforward, scalable, and simple to integrate with other tools. Thanks to Spark’s scalability, language compatibility, and speed, data scientists can concentrate on their data challenges and models rather than on the difficulties associated with distributed data (such as infrastructure, configurations, and so on). MLlib is made up of commonly used learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, and dimensionality reduction, along with the underlying optimization methods. As of Spark 2.0, the RDD-based APIs in the spark.mllib package are in maintenance mode, and the DataFrame-based API in the spark.ml package is the primary machine learning API for Spark.

What’s Word Embedding and Word2Vec?

Word embeddings are learned representations of text in which words with similar meanings have similar representations. Individual words are represented as real-valued vectors in a predefined vector space. Each word is mapped to a single vector, and the vector values are learned in a way that resembles training a neural network. Word2Vec is one of the most popular methods for learning word embeddings with shallow neural networks. It was introduced in 2013 by Tomas Mikolov and colleagues at Google. Word2Vec takes a text corpus as input and produces a set of feature vectors, one for each word in the corpus. In this way it converts text into a numerical form that downstream models, including deep neural networks, can work with. The Word2Vec objective pushes the embeddings of words that appear in similar contexts toward each other, so these words end up close together in the vector space.
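To make "similar contexts" concrete, the sketch below lists the (center word, context word) pairs that a skip-gram style model would be trained on for one sentence. It is plain Python for illustration only: the function name `context_pairs`, the sample sentence, and the window size are assumptions, not part of Spark's API.

```python
def context_pairs(tokens, window=2):
    """Return (center, context) pairs for every token within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


# Hypothetical sentence, already tokenized
sentence = ["spark", "runs", "sql", "queries"]
for pair in context_pairs(sentence, window=1):
    print(pair)
```

Words that keep showing up with the same context words produce similar training pairs, which is why their vectors tend to drift toward each other.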

Why SparkMLlib then?

Spark MLlib includes tools for data preparation, feature engineering, model training and evaluation, and model deployment. Through Spark’s data sources it can work with data in formats including CSV, JSON, and Avro. It is also useful in combination with other Spark libraries: together with Spark SQL and Spark Streaming, it can be used to build complete machine learning pipelines. A focus on usability is one of MLlib’s standout characteristics. The library offers many high-level APIs, so even users with little machine learning experience can develop and tune models. For instance, MLlib ships a variety of ready-made algorithms that can be applied to a dataset and then tuned to improve their performance.

Prerequisites

Refer to the Spark Setup guide to install Spark on your local machine. Instructions for linking PyCharm with Spark can be found here.

Implementation

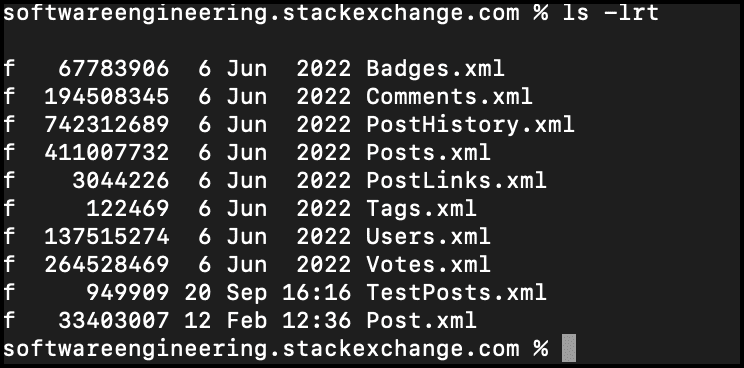

To train the Word2Vec model, this implementation uses Posts.xml from the Stack Exchange archive for Software Engineering. Get the data from this link.

Open a new project in PyCharm, set the Python interpreter to version 3.7, then install the packages required for PySpark.

Because the data is in XML format, we need spark-xml_2.12-0.6.0.jar in the Spark environment.

Create a Spark session and read the file as below:

from pyspark.sql import SparkSession

# Start a Spark session with the spark-xml jar on the classpath

spark = (SparkSession.builder

    .appName('word2Vec')

    .config("spark.jars", "<path_to_your_jars>/spark-xml_2.12-0.6.0.jar")

    .getOrCreate())

# Read Posts.xml, treating each <row> element as one DataFrame row

df = (spark.read

    .format("com.databricks.spark.xml")

    .option("rootTag", "tags")

    .option("rowTag", "row")

    .load("<path_to_your_data>/Posts.xml"))

Word2Vec trains a model of Map(String, Vector): it maps each word to a numeric vector that can be used in further natural language processing or machine learning.

The _Body field is used for model training. In its raw form, however, the _Body field includes undesired characters, symbols, and HTML tags, so it has to be cleaned first.

The following code illustrates the data cleaning.

from pyspark.sql.functions import regexp_replace

# Remove <code> snippets; (?s) lets '.' match newlines so multi-line snippets are removed too

df = df.withColumn('remove_code', regexp_replace(df._Body, r'(?s)<code>(.*?)</code>', ' '))

# Remove the remaining HTML tags

df = df.withColumn('clean', regexp_replace(df.remove_code, r'<.*?>', ' '))

# Replace newlines with spaces

df = df.withColumn('clean_1', regexp_replace(df.clean, r'\n', ' ')).drop('clean')

# Replace everything except letters with spaces

df = df.withColumn('clean_2', regexp_replace(df.clean_1, r'[^A-Za-z]', ' ')).drop('clean_1')

df.select('_Body', 'clean_2').show(20, False)
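To see what each regular expression does without starting Spark, the sketch below applies the same four steps to a single string with Python's `re` module. The helper name `clean_body` and the sample post body are made up for illustration, and `re.DOTALL` is an added assumption so that code snippets spanning several lines are removed as well.

```python
import re


def clean_body(body):
    """Apply the four cleaning steps to one post body."""
    # 1. Drop <code>...</code> snippets (DOTALL lets '.' match newlines)
    text = re.sub(r'<code>(.*?)</code>', ' ', body, flags=re.DOTALL)
    # 2. Drop the remaining HTML tags
    text = re.sub(r'<.*?>', ' ', text)
    # 3. Replace newlines with spaces
    text = re.sub(r'\n', ' ', text)
    # 4. Replace everything except letters with spaces
    text = re.sub(r'[^A-Za-z]', ' ', text)
    return text


# Hypothetical post body
sample = "<p>Use <code>SELECT 1</code> in SQL.</p>\n"
print(clean_body(sample).split())
```

The result still contains runs of spaces where tags and symbols used to be, which is why the later tokenization step splits on one or more whitespace characters.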

Stop words are ordinary, frequently used terms such as “the,” “and,” and “I” that tell the reader nothing about a document’s particular subject. Removing them from the corpus leaves the rarer terms that are more likely to be relevant to our analysis.

The StopWordsRemover transformer is used to remove the stop words from the cleaned data. NLTK (Natural Language Toolkit) provides the list of English stop words.

The text is converted to lowercase and split into tokens before the stop words are removed.

import nltk

from nltk.corpus import stopwords

import pyspark.sql.functions as F

from pyspark.ml.feature import StopWordsRemover

# Download the NLTK stop word list (only needed once)

nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

stopWordsList = list(stop_words)

# Lowercase and trim the text, then split it on whitespace into tokens

df = df.withColumn("clean_2", F.trim(F.lower(df.clean_2)))

df = df.withColumn("tokens", F.split("clean_2", "\\s+"))

# Remove stop words and join the remaining tokens back into a single string

remover = StopWordsRemover(stopWords=stopWordsList, inputCol="tokens", outputCol="cleaned_token_body")

result = remover.transform(df).select("tokens", "clean_2", F.array_join("cleaned_token_body", " ").alias("stop"))
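The effect of this step on one row can be sketched in plain Python. The tiny stop list and the helper name `remove_stop_words` below are hypothetical stand-ins for the NLTK list and the StopWordsRemover transformer, so treat this as an illustration of the idea rather than of Spark's implementation.

```python
import re

# Hypothetical mini stop list; the article uses NLTK's full English list
STOP_WORDS = {"the", "and", "i", "a", "is", "in", "to"}


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Lowercase, split on whitespace, and drop stop words and empty tokens."""
    tokens = re.split(r"\s+", text.lower().strip())
    return [t for t in tokens if t and t not in stop_words]


print(remove_stop_words("  The index is stored in a B tree "))
```

Stripping the text before splitting avoids empty tokens at the start and end, which would otherwise end up in the Word2Vec input.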

Once the data is cleaned, it is split into an array of words, because the Word2Vec model takes arrays of words as input and produces a Map(String, Vector).

After word2Vec.fit() has finished, the model.findSynonyms() function can be used to get the words most similar to a given word from the trained model.

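from pyspark.ml.feature import Word2Vec

# Split the cleaned text back into an array of words, the input format Word2Vec expects

result_split = result.withColumn("splitted_field", F.split("stop", " "))

result_split = result_split.select("splitted_field")

# Configure and train the model

word2Vec = Word2Vec(vectorSize=100, seed=1, minCount=2, inputCol="splitted_field", outputCol="model")

word2Vec.setMaxIter(1)

model = word2Vec.fit(result_split)

# Show the 10 words closest to "sql"

model.findSynonyms("sql", 10).show(10, False)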
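Conceptually, findSynonyms() ranks the other words in the vocabulary by the cosine similarity between their vectors and the vector of the query word. The sketch below reproduces that idea in plain Python on a made-up, three-dimensional word-to-vector map. The names `cosine`, `find_synonyms`, and `toy_vectors` are hypothetical, and the numbers are not output from a trained model (in Spark, the learned vectors can be inspected with model.getVectors()).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def find_synonyms(vectors, word, num):
    """Return the `num` words closest to `word`, most similar first."""
    query = vectors[word]
    scored = [(other, cosine(query, vec))
              for other, vec in vectors.items() if other != word]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:num]


# Made-up vectors, for illustration only
toy_vectors = {
    "sql":      [0.9, 0.1, 0.0],
    "database": [0.8, 0.2, 0.1],
    "query":    [0.7, 0.3, 0.0],
    "banana":   [0.0, 0.1, 0.9],
}

for word, score in find_synonyms(toy_vectors, "sql", 2):
    print(word, round(score, 3))
```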

Let’s look more closely at the parameters used to initialise this model.

vectorSize – Specifies the number of dimensions of the embedding vectors.

seed – The seed for the random number generator used to initialise the network weights. If it is left as None, a different random value is used each time the model is fitted, so the results may differ between runs. Set it to a fixed value of your choice to get more consistent results.

minCount – The minimum number of times a token must appear in the corpus for the Word2Vec model to include it in its vocabulary.
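The roles of minCount and seed can be illustrated without Spark. In the sketch below, `build_vocab` keeps only tokens that occur at least `min_count` times, and `init_vector` shows that a fixed seed reproduces the same starting values. Both helpers, the toy corpus, and the initialisation scheme are assumptions made for illustration and do not reflect Spark's internal code.

```python
import random
from collections import Counter


def build_vocab(sentences, min_count):
    """Keep only tokens that occur at least `min_count` times in the corpus."""
    counts = Counter(token for sentence in sentences for token in sentence)
    return {token for token, n in counts.items() if n >= min_count}


def init_vector(size, seed):
    """Draw a small random starting vector; the same seed gives the same values."""
    rng = random.Random(seed)
    return [rng.uniform(-0.5, 0.5) / size for _ in range(size)]


# Hypothetical tokenized corpus
corpus = [["sql", "join", "table"], ["sql", "index"], ["join", "query"]]

print(sorted(build_vocab(corpus, min_count=2)))
print(init_vector(3, seed=1) == init_vector(3, seed=1))
```

A higher minCount shrinks the vocabulary and drops rare, often noisy tokens, at the cost of having no vector for them at lookup time.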

Finally, the save() function is used to save the trained model.

model.save('<path_on_your_system>/word2vec.model')

We can load the saved model in PySpark, so we don’t need to train the model every time.

from pyspark.ml.feature import Word2VecModel

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('LoadSavedModel').getOrCreate()

loadedModel = Word2VecModel.load('<path_to_your_saved_model>/word2vec.model')

loadedModel.findSynonyms("bigdata", 10).show(10, Fals